Lenovo WA5480G3 8-GPU AI/Compute Server Configuration

- Product categories: Servers

- Part number: Lenovo WA5480G3

- Availability: In Stock

- Condition: New

- Product features: Ready to Ship

- Minimum order: 1 unit

- List price was: $349,999.00

- Your price: $320,629.00 (you save $29,370.00)


Relax. Returns are accepted.

Shipping: International shipments may be subject to customs processing and additional charges.

Delivery: Please allow additional time if international delivery is subject to customs processing.

Returns: Returns are accepted within 14 days. The seller pays return shipping.

FREE shipping. We accept NET 30-day purchase orders. You can get a decision in seconds without affecting your credit.

If you need to buy Lenovo WA5480G3 units in large quantities, call us on WhatsApp at (+86) 151-0113-5020 or request a quote via live chat, and our sales manager will contact you shortly.

Keywords

Lenovo WA5480G3, 8 GPU server, Xeon Gold 6530, 2,048 GB DDR5 ECC RAM, NVMe storage array, PERC H755 RAID, Nvidia H200 NVL, ConnectX-7 400G, AI training server

Description

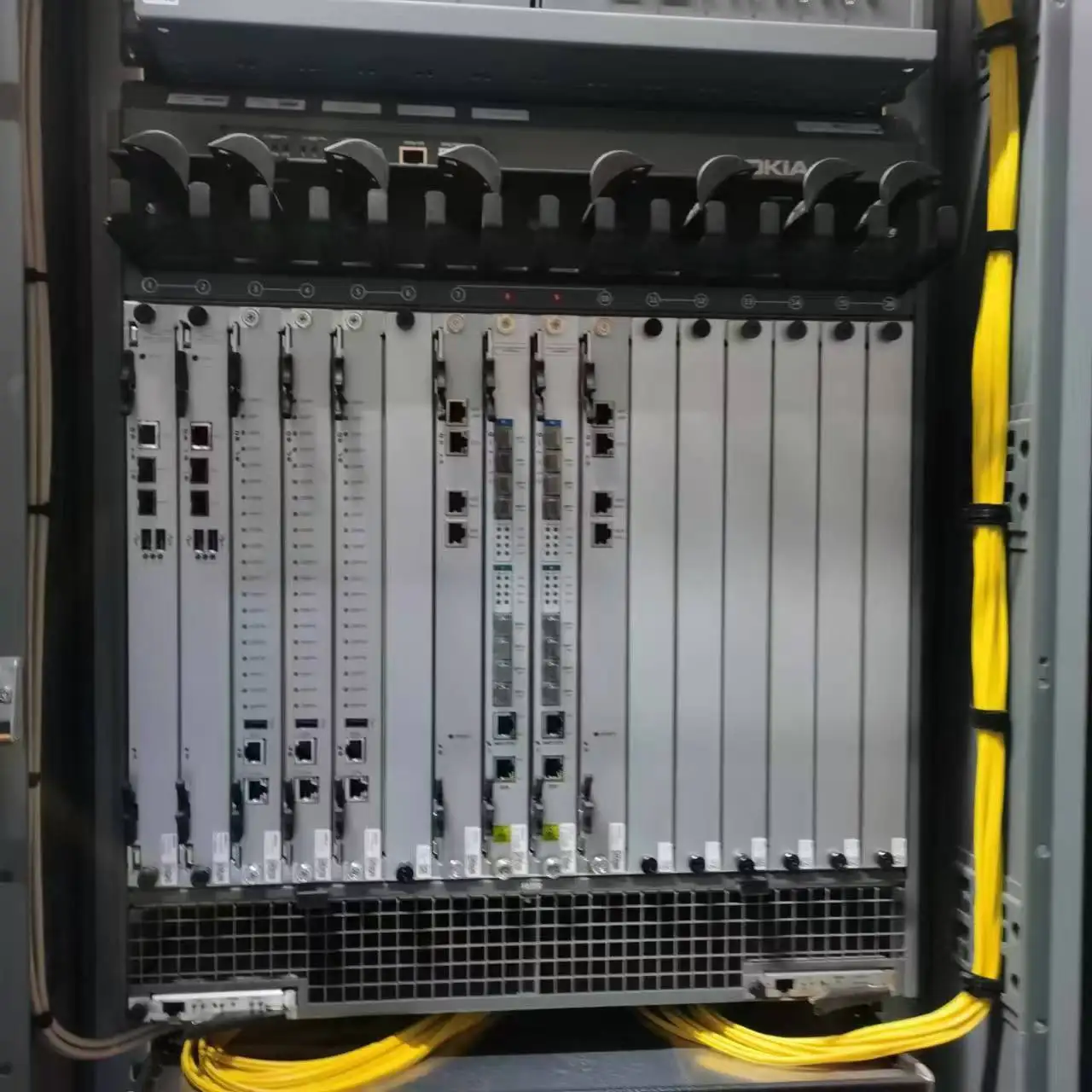

The Lenovo WA5480G3 is a high-density 4U AI/compute server for demanding workloads such as model training, deep learning inference, and large-scale data analytics. This variant is configured with **8 × Nvidia H200 NVL GPUs**, paired with CPU, memory, and storage resources sized to keep the GPUs supplied with data.

At its core, it houses dual **Intel® Xeon® Gold 6530** processors (32 cores each) to feed GPU workloads. With **32 modules of 64 GB DDR5 ECC memory** (totaling 2,048 GB), the system has ample host memory for dataset staging, caching, and model checkpoints.

Storage consists of **24 × 7.68 TB NVMe SSDs** for fast direct I/O, plus **2 × 480 GB M.2 SSDs** in RAID for OS, boot, and metadata. An **Nvidia ConnectX-7 400 Gbps** adapter handles high-speed node-to-node traffic and data ingest/egress, alongside 4 × 1 GbE ports. **4 × 2700 W power supplies** provide redundancy to keep the system running under heavy GPU load.

This configuration is ideal for training large neural networks, handling massive batch inferencing, or running hybrid workloads where both CPU and GPU cycles are critical. The system is designed for maximum GPU density while preserving I/O, memory, and power balance across the platform.

Key Features

- Dual Intel Xeon Gold 6530 processors for compute and coordination

- 2,048 GB (32 × 64 GB) DDR5 ECC memory, enabling large dataset caching

- 24 × 7.68 TB NVMe drives for high throughput and low latency storage

- 2 × 480 GB M.2 SSDs in RAID for OS / boot / metadata performance

- 8 × Nvidia H200 NVL GPUs (Part Number 900-21010-0040-000), optimized for AI training and inference

- 4 × Nvidia 2-Way NVLink bridges (900-53651-0000-000), enough to link the 8 GPUs in pairs over a high-bandwidth path

- Single-port 400 Gbps ConnectX-7 NIC (MCX75510AAS-NEAT) for GPU / data path connectivity

- 4 × 1 GbE PCIe network ports for management and control traffic

- Redundant 4 × 2700 W power supplies for failover and sustained power delivery

- Rail kit support for 4U rack mounting and serviceability

Configuration

| Component | Specification / Part Number |
|---|---|
| Model / Chassis | Lenovo WA5480G3 (4U, GPU AI platform) |
| Processor | 2 × Intel Xeon Gold 6530 |
| Memory | 32 × 64 GB DDR5 ECC (2,048 GB total) |
| NVMe Storage | 24 × 7.68 TB NVMe SSD |
| M.2 SSD (RAID) | 2 × 480 GB M.2 SSD in RAID |
| GPU Accelerators | 8 × Nvidia H200 NVL (PN 900-21010-0040-000) |
| NVLink Bridges | 4 × Nvidia 2-Way NVLink (PN 900-53651-0000-000) |
| High-Speed NIC | 1 × Nvidia ConnectX-7 400 Gbps (MCX75510AAS-NEAT) |
| Ethernet Ports | 4 × 1 GbE via PCIe |
| Power Supplies | 4 × 2700 W redundant PSU |
| Accessories | Rail Kit, cabling, GPU risers, cooling system |
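The totals implied by the table can be checked with a few lines of arithmetic. The sketch below uses only the quantities listed above, except for the per-GPU memory figure: 141 GB per H200 NVL card is an assumption that this listing does not state, so treat the aggregate GPU memory as illustrative.

```python
# Quantities taken from the configuration table.
DIMM_COUNT, DIMM_GB = 32, 64
NVME_COUNT, NVME_TB = 24, 7.68
GPU_COUNT = 8
PSU_COUNT, PSU_WATTS = 4, 2700

# Assumption (not stated in this listing): 141 GB of memory per H200 NVL card.
GPU_MEMORY_GB = 141

host_memory_gb = DIMM_COUNT * DIMM_GB          # total system RAM
raw_nvme_tb = round(NVME_COUNT * NVME_TB, 2)   # raw capacity, before any RAID
gpu_memory_gb = GPU_COUNT * GPU_MEMORY_GB      # aggregate GPU memory (assumed)
installed_psu_watts = PSU_COUNT * PSU_WATTS    # all supplies, no redundancy reserved

print(f"Host memory:         {host_memory_gb} GB")
print(f"Raw NVMe capacity:   {raw_nvme_tb} TB")
print(f"GPU memory (assumed): {gpu_memory_gb} GB")
print(f"Installed PSU power: {installed_psu_watts} W")
```

The host memory figure matches the 2,048 GB stated in the table; the other totals are derived rather than quoted.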

Compatibility

The Lenovo WA5480G3 is marketed in Lenovo’s “WenTian / Qtian” AI server line, built to support 4th Gen or 5th Gen Intel Xeon Scalable platforms.

The system supports PCIe Gen5 expansion suitable for modern GPUs and accelerators.

Lenovo has certified this platform for multiple operating systems and AI stacks.

Common supported OS include Red Hat Enterprise Linux, SUSE Linux Enterprise, Ubuntu, and virtualization/hypervisor systems.

The NVMe storage subsystem and GPU interconnects are compatible with Intel Volume Management Device (VMD), PCIe switch architectures, and direct GPU access paths. The ConnectX-7 400G NIC supports RDMA for high-throughput networking.

Software frameworks including CUDA, TensorFlow, PyTorch, and distributed training libraries (e.g. NVIDIA NCCL, Horovod) are expected to interoperate on this architecture with proper driver support. ROCm targets AMD GPUs and does not apply to this Nvidia-based configuration.

Usage Scenarios

This 8-GPU configuration is ideal for **AI / deep learning model training** tasks, especially those requiring large memory and high GPU throughput concurrently. The dual CPU setup helps coordinate GPU workloads while feeding data pipelines efficiently.

It’s also suited for **hybrid inference + training clusters**, where portions of the workload run on CPU (pre-processing, orchestration) and the heavy lifting is done by GPUs. The 400 Gbps NIC enables high bandwidth data distribution across nodes or storage systems.

Use cases such as **large language model fine-tuning**, **computer vision training on high-dimensional inputs**, **reinforcement learning workloads**, and **multi-tenant AI services** will benefit from this design.

The system is also applicable in **HPC / simulation workloads** that can leverage GPU acceleration, or **data analytics pipelines** that combine CPU and GPU compute stages in a single server node.

Frequently Asked Questions (FAQs)

1. Can this Lenovo WA5480G3 configuration support PCIe bifurcation or switch topologies for the 8 GPUs?

Yes. The WA5480G3 is built to support multiple expansion topologies, with GPUs attached either through PCIe switches or directly to CPU lanes. GPUs can additionally be linked to each other with NVLink bridges.
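To see why switch topologies matter for this configuration, a rough lane budget helps. The sketch below is a back-of-the-envelope estimate, not a description of the actual board layout: the 80 PCIe 5.0 lanes per socket, the x16 link width for GPUs and the 400G NIC, and the x4 width per NVMe drive are all assumptions, and the 1 GbE and M.2 devices are ignored.

```python
# Assumptions (not from this listing): 80 PCIe 5.0 lanes per CPU socket,
# x16 links for GPUs and the 400G NIC, x4 links for NVMe drives.
LANES_PER_SOCKET = 80
SOCKETS = 2

# device name -> (count from the configuration, assumed link width in lanes)
devices = {
    "GPU": (8, 16),
    "400G NIC": (1, 16),
    "NVMe SSD": (24, 4),
}


def lane_budget(devices, sockets=SOCKETS, lanes_per_socket=LANES_PER_SOCKET):
    """Return (lanes requested at full width, lanes provided by the CPUs)."""
    requested = sum(count * width for count, width in devices.values())
    available = sockets * lanes_per_socket
    return requested, available


requested, available = lane_budget(devices)
needs_switching = requested > available

print(f"Requested at full width: {requested} lanes")
print(f"Provided by CPUs:        {available} lanes")
print(f"Switches or narrower links needed: {needs_switching}")
```

Under these assumptions the devices request more lanes than the two CPUs provide, which is the situation where PCIe switches (or reduced link widths) come into play.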

2. How do I optimize storage performance when using 24 NVMe drives?

You can configure the NVMe drives as JBOD, as a software-managed array (e.g. RAID 5/6 or erasure coding), or behind a caching layer. The M.2 RAID pair hosts the boot volumes and metadata, leaving the NVMe drives dedicated to data I/O.
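The capacity cost of each layout is easy to estimate. This minimal sketch assumes all 24 drives sit in a single group and that parity consumes exactly one drive's worth of space for RAID 5 and two for RAID 6; real deployments often split drives into several groups and lose a little more to filesystem overhead. The helper name `usable_tb` is hypothetical.

```python
def usable_tb(drives, drive_tb, parity_drives=0):
    """Usable capacity when `parity_drives` drives' worth of space holds parity."""
    if not 0 <= parity_drives < drives:
        raise ValueError("parity_drives must be between 0 and drives - 1")
    return round((drives - parity_drives) * drive_tb, 2)


# Layout name -> number of drives' worth of parity (single 24-drive group assumed).
layouts = {"JBOD": 0, "RAID 5": 1, "RAID 6": 2}
capacities = {name: usable_tb(24, 7.68, parity) for name, parity in layouts.items()}

for name, tb in capacities.items():
    print(f"{name}: {tb} TB usable")
```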

3. What cooling and power considerations are necessary with 8 GPUs and 4 × 2700 W supplies?

This server requires robust airflow and a careful thermal design. The four 2700 W supplies provide 10.8 kW of installed capacity, which leaves headroom under maximum GPU load. Size rack airflow, hot-aisle/cold-aisle alignment, and power distribution for more than 10 kW per node.
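How much redundancy the four supplies actually give depends on the real load. The sketch below compares an estimated load against the remaining PSU capacity as supplies fail. Every wattage except the 2700 W PSU rating is an assumption for illustration: 600 W per GPU, 270 W per CPU, and a 1000 W placeholder for drives, memory, fans, and NICs. Substitute measured values before relying on the result.

```python
PSU_COUNT, PSU_WATTS = 4, 2700  # from the configuration

# Assumed figures, not taken from this listing; replace with measured values.
GPU_WATTS = 600      # assumed maximum board power per GPU
CPU_WATTS = 270      # assumed TDP per CPU
OTHER_WATTS = 1000   # placeholder for drives, memory, fans, NICs

estimated_load = 8 * GPU_WATTS + 2 * CPU_WATTS + OTHER_WATTS


def capacity_with_failures(failed):
    """Total wattage still available after `failed` supplies drop out."""
    return (PSU_COUNT - failed) * PSU_WATTS


# failed PSU count -> whether the remaining supplies cover the estimated load
survives = {
    failed: capacity_with_failures(failed) >= estimated_load
    for failed in range(3)
}

print(f"Estimated load: {estimated_load} W")
for failed, ok in survives.items():
    print(f"{failed} PSU(s) failed: {capacity_with_failures(failed)} W available, covered={ok}")
```

With these placeholder numbers the load is covered with one supply lost but not with two, so the redundancy mode (for example 3+1 versus 2+2) is worth confirming against the actual power draw.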

4. Which frameworks and libraries are supported on this hardware?

The system supports CUDA, cuDNN, NCCL for GPU computing, and distributed AI frameworks such as TensorFlow, PyTorch, Horovod, and MXNet. The ConnectX-7 NIC supports RDMA for efficient inter-node communication. Ensure you use up-to-date drivers and libraries matching kernel and firmware versions.
